Neuroscience Goes Virtual: How to Measure Consumers’ Responses in Extended Realities

A new environment for consumer behavior

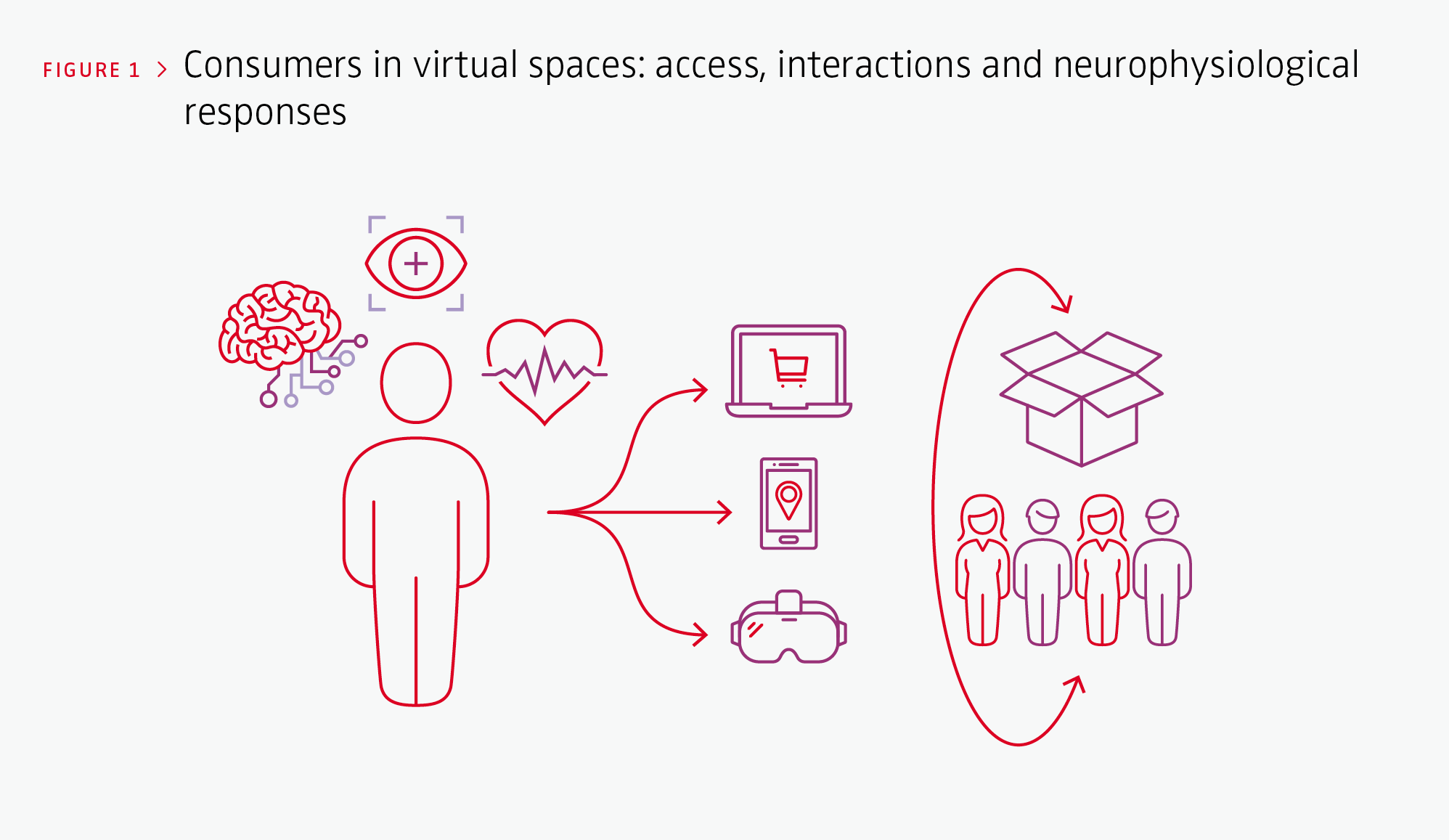

The metaverse is attracting renewed interest because of the fascinating possibilities it offers in virtual spaces. While its boundaries are yet to be defined, the main types of actions foreseen in the metaverse are clear. People will interact with others, including simulated people and avatars, in an advanced form of social media. People will also interact with objects such as products. These interactions will take place in virtual or augmented spaces that mimic natural or invented environments. Transporting people to such an environment requires an interface that can evoke a sense of physical presence in the space. Three device types can deliver this: computer monitors; small, portable screens such as smartphones; and head-mounted display (HMD) glasses. Each type creates a different level of immersion in the environment, and technological advances are driving the development of ever lighter and more powerful virtual reality devices. Even as extended realities open up new spaces, little is known about how consumers behave in them. Consumer neuroscience tools are a promising way to learn more about the interactions between people and objects. Figure 1 shows how consumers access the extended realities in which they interact, as well as the physiological responses, such as heart rate, eye movements and brain activity, that can be tracked.

Exploring how consumers behave in virtual spaces

Pinpointing customer responses has always been challenging for marketers and academic researchers. Measuring consumer reactions by means other than questionnaires is becoming increasingly popular. Big data consists of massive datasets of short inputs that are not conditioned by closed questions. Neuroscience, in turn, delivers microdata: signals that are more detailed, run deeper and are collected over time. Neuroscience tools have two main benefits: they capture unconscious and emotional reactions, and they measure the subject’s responses continuously. Since the metaverse embraces human-to-human and human-to-object interactions through computers, smartphones and HMDs, it seems sensible to adopt deeper measures that capture users’ emotions and unconscious responses.

Using neuroscience in extended realities

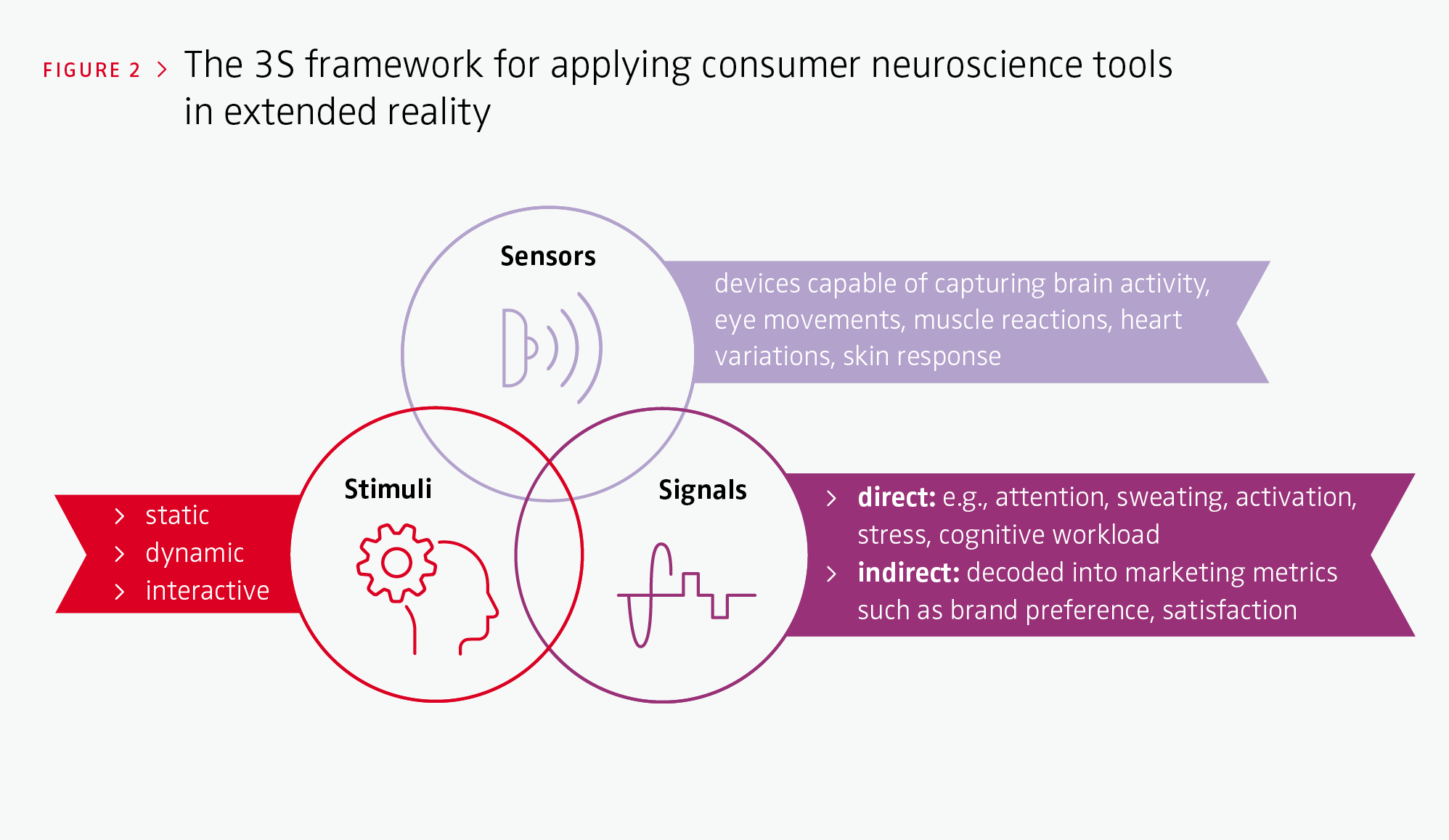

While neuroscience tools are becoming popular in marketing research, applying them to extended realities is challenging for two main reasons. First, extended reality devices must accommodate the sensors’ signals, either by integrating the sensors or by being compatible with them. Second, measurements from isolated neuroscience tools must be synchronized into a single data source. Integrating data from diverse neuroscience signals, behavioral interactions and self-reports is a further challenge. We describe this new kind of data-rich environment with what we call the 3S framework, which stands for Stimuli, Sensors and Signals (see Figure 2).
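Synchronizing separate sensor streams into a single data source can be illustrated with a minimal Python sketch. It assumes that every stream has already been timestamped on a shared clock (establishing that clock is not handled here) and that linear interpolation onto a common sampling rate is acceptable; the function names `synchronize` and `interpolate`, as well as the example streams and values, are hypothetical. Real pipelines typically add filtering and artifact removal before alignment.

```python
from bisect import bisect_left
from typing import Dict, List, Tuple

# (timestamp in seconds on a shared clock, sensor value); assumed time-sorted
Sample = Tuple[float, float]


def interpolate(times: List[float], values: List[float], t: float) -> float:
    """Linearly interpolate a stream at time t, holding the end values outside its range."""
    i = bisect_left(times, t)
    if i == 0:
        return values[0]
    if i == len(times):
        return values[-1]
    # Here times[i - 1] < t <= times[i], so the denominator is never zero
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def synchronize(streams: Dict[str, List[Sample]], rate_hz: float) -> List[Dict[str, float]]:
    """Resample non-empty streams onto one common timeline covering their overlap."""
    split = {name: ([s[0] for s in st], [s[1] for s in st]) for name, st in streams.items()}
    start = max(times[0] for times, _ in split.values())
    end = min(times[-1] for times, _ in split.values())
    step = 1.0 / rate_hz
    n_steps = int((end - start) / step + 1e-9) + 1 if end >= start else 0
    rows = []
    for k in range(n_steps):
        t = start + k * step
        row = {"t": t}
        for name, (times, values) in split.items():
            row[name] = interpolate(times, values, t)
        rows.append(row)
    return rows


# Hypothetical example: heart rate sampled at 1 Hz, skin conductance at 2 Hz
streams = {
    "heart_rate": [(0.0, 70.0), (1.0, 72.0), (2.0, 74.0)],
    "skin_conductance": [(0.0, 1.0), (0.5, 1.2), (1.0, 1.4), (1.5, 1.6), (2.0, 1.8)],
}
rows = synchronize(streams, rate_hz=2.0)
```

Each resulting row holds one timestamp and one value per sensor, which is the shape a single data source needs before behavioral logs and self-reports can be joined to it.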

> Stimuli

Understanding how individual stimuli influence brand choices is essential for redefining products, launching new brands and selecting advertising appeals. In an extended reality environment, stimuli can comprise static elements, like a door or a landscape; dynamic content, like 2D or 360° videos; and content that changes with user interactions (e.g., layouts and shapes). Each type of stimulus is a rich source of information for the researcher and can be manipulated as an experimental research instrument. On a shelf, for example, the size, color, position and number of products can be varied according to the research objectives. Other stimuli, such as offers, promotional gadgets, advertising formats and store layouts, as well as sensory stimuli, such as music and noise, can easily be modified in a virtual reality setting. Virtual try-ons and smart mirrors further expand the array of stimuli in virtual spaces.
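Treating shelf attributes as experimental factors can be sketched as a full factorial design with balanced random assignment. The factor names and levels below (`size`, `color`, `position`, `facings`) and the helper functions are hypothetical placeholders, and a full factorial is only one option; with many factors, researchers often turn to fractional designs instead.

```python
import random
from collections import Counter
from itertools import product
from typing import Dict, List, Sequence

# Hypothetical shelf factors and levels; "facings" = number of product units shown
FACTORS: Dict[str, list] = {
    "size": ["small", "large"],
    "color": ["red", "blue"],
    "position": ["eye_level", "bottom_shelf"],
    "facings": [1, 3],
}


def full_factorial(factors: Dict[str, list]) -> List[dict]:
    """Return every combination of factor levels as one experimental condition."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*(factors[n] for n in names))]


def assign_conditions(participants: Sequence[str], n_conditions: int,
                      seed: int = 42) -> Dict[str, int]:
    """Shuffle participants, then deal them round-robin so group sizes differ by at most one."""
    rng = random.Random(seed)
    order = list(participants)
    rng.shuffle(order)
    return {p: i % n_conditions for i, p in enumerate(order)}


conditions = full_factorial(FACTORS)          # 2 x 2 x 2 x 2 = 16 virtual shelves
participants = [f"P{i:02d}" for i in range(32)]
assignment = assign_conditions(participants, len(conditions))
group_sizes = Counter(assignment.values())    # participants per condition
```

Because the virtual shelf is rendered from these condition records, each participant can see exactly one combination of attributes while everything else in the scene stays constant.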

> Sensors

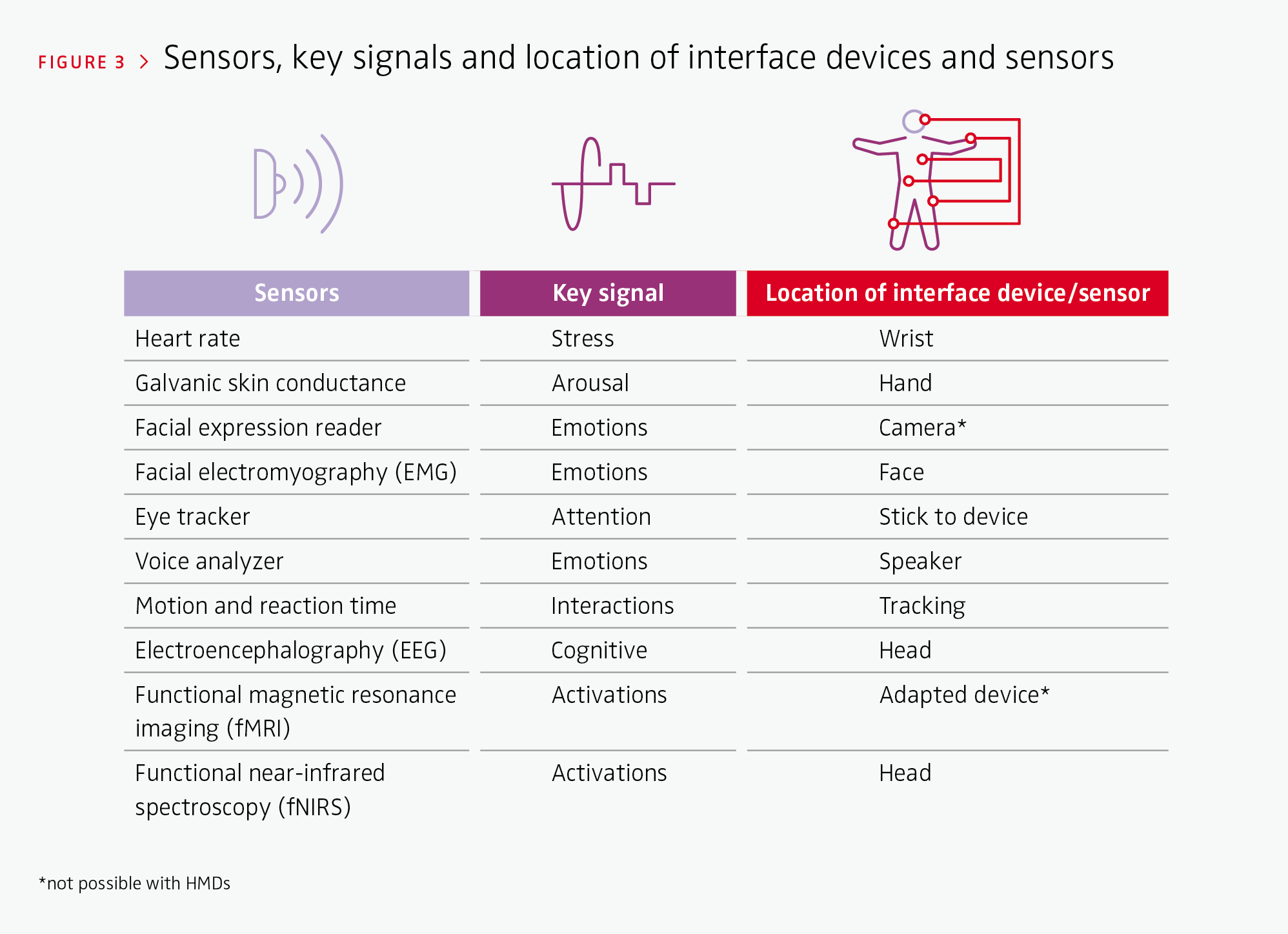

In the neuroscience domain, a sensor is any device capable of capturing signals from the central or peripheral nervous system, including the autonomic and somatic nervous systems. Sensors can track electrical activity and blood-flow changes in brain areas as well as movements, muscle activity, heart rate variations and skin responses. Figure 3 shows that many sensors can be integrated into or connected with interface devices.

> Signals

Finally, signals are any physiological or brain responses that indicate a reaction, such as attention, sweating, activation, stress, cognitive workload or withdrawal. These direct signals must be decoded into meaningful marketing metrics, or indirect signals, such as brand preference, satisfaction, or positive or negative consumer emotions. Collaborative scientific research should therefore continue to search for links between direct and indirect signals. While this work continues, marketing researchers already have tools available that make such research feasible.

Neuroscience tools in different types of extended realities

The three interface paths depicted in Figure 1 determine not only the level of user immersion but also the variety of sensors available for capturing users’ unconscious reactions. Figure 3 shows which sensors can be used with each interface device, where the sensors can be attached and which signals they capture. All sensors work with monitors and smartphones. With HMDs, all sensors can be used except those for face reading and fMRI. Ongoing developments are expected to allow emotions to be measured through electromyography sensors attached to VR headsets.

> Neuroscience in augmented reality

Since augmented reality superimposes virtual objects onto a live environment, applying neuroscience tools to its users does not demand additional technology. An array of equipment is already available on the market at affordable cost. Retail solutions such as fitting rooms, try-ons and large video walls require specific sensors because of their screen type. Visualizations on ordinary PC monitors or smartphones, however, are easy to implement. On the other hand, with a handheld screen such as a smartphone, capturing homogeneous signals can be difficult because the viewing angle keeps changing.

> Neuroscience in virtual reality environments

In virtual stores, museums and restaurants, users’ interactions with products, including their body and hand movements, stops and revisits, can be tracked and analyzed. Tracking offers a valuable opportunity to link consumer neuroscience tools with behavior in specific situations, such as visiting a virtual store or making deliberate versus impulsive purchases. Again, applying neuroscience tools to virtual reality environments viewed on PC monitors is relatively easy and affordable. Existing technology can also be used with HMDs: some suppliers embed sensors in their glasses that could be used for data collection. For instance, eye tracking is possible with HTC or Varjo glasses. Further, wearable devices attached to the body are becoming common and could overcome the technical barriers of static devices. However, face reading is not feasible with HMDs, as most of these devices cover much of the face. Facial electromyography could still be used, and new sensor integrations are emerging in many glasses, e.g., solutions from Varjo and Galea and from emteq labs. EEG and fNIRS have been successfully applied to people wearing HMDs; however, combining fMRI with HMDs needs further development in the consumer field.
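Stops and revisits can be derived from the positions a virtual store logs as the user moves. The sketch below is a hypothetical example: it assumes the store records time-stamped floor coordinates `(t, x, z)`, that shelf zones are axis-aligned rectangles, and that a stop means staying in one zone for at least `min_dwell` seconds (2 s here, an arbitrary threshold). A revisit is a stop in a zone where the user has already stopped before.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Floor rectangle of a shelf zone: (x_min, z_min, x_max, z_max), units assumed to be metres
Rect = Tuple[float, float, float, float]


@dataclass
class Stop:
    zone: str
    start: float
    end: float
    revisit: bool


def zone_of(x: float, z: float, zones: Dict[str, Rect]) -> Optional[str]:
    """Return the first zone containing the point, or None for aisles and empty space."""
    for name, (x0, z0, x1, z1) in zones.items():
        if x0 <= x <= x1 and z0 <= z <= z1:
            return name
    return None


def detect_stops(track: List[Tuple[float, float, float]], zones: Dict[str, Rect],
                 min_dwell: float = 2.0) -> List[Stop]:
    """Turn time-sorted (t, x, z) samples into stops; flag stops in already-visited zones."""
    stops: List[Stop] = []
    visited = set()
    seg_zone: Optional[str] = None
    seg_start = seg_end = 0.0

    def close_segment() -> None:
        # Record the current segment only if it lies inside a zone and lasted long enough
        if seg_zone is not None and seg_end - seg_start >= min_dwell:
            stops.append(Stop(seg_zone, seg_start, seg_end, seg_zone in visited))
            visited.add(seg_zone)

    for t, x, z in track:
        zone = zone_of(x, z, zones)
        if zone == seg_zone:
            seg_end = t          # still in the same zone (or still in the aisle)
        else:
            close_segment()      # zone changed: evaluate the segment that just ended
            seg_zone, seg_start, seg_end = zone, t, t
    close_segment()
    return stops


# Hypothetical track sampled once per second
zones = {"cereal": (0.0, 0.0, 2.0, 1.0), "coffee": (5.0, 0.0, 7.0, 1.0)}
track = [
    (0.0, 1.0, 0.5), (1.0, 1.0, 0.5), (2.0, 1.5, 0.5),   # dwell at the cereal shelf
    (3.0, 3.0, 3.0),                                      # aisle
    (4.0, 6.0, 0.5),                                      # brief pass by the coffee shelf
    (5.0, 3.0, 3.0),                                      # aisle
    (6.0, 1.0, 0.5), (7.0, 1.0, 0.5), (8.0, 1.0, 0.5),   # back at the cereal shelf
]
stops = detect_stops(track, zones)
```

Here the brief pass by the coffee shelf is too short to count as a stop, while the return to the cereal shelf is flagged as a revisit; the resulting stop intervals can then be aligned with the synchronized sensor signals recorded during the same seconds.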

> Neuroscience in mixed reality

Mixed reality combines augmented and virtual reality and also uses glasses, so most of the points made above about HMDs also apply to mixed reality. For instance, HoloLens 2 provides eye tracking through two infrared cameras and head tracking through four visible-light cameras. However, because this technology is more recent, costs are still high.

Consumer research applications in extended realities

Extended reality has numerous promising applications in consumer research. Regardless of type (augmented, virtual or mixed), it opens a fascinating window for research in multiple directions. Extended reality can be used to facilitate pretests and purchase tests. Pretests can cover new products or interior designs. Preferences for products worn on the body can be tested through virtual try-on apps for makeup, glasses or sneakers. Moreover, purchase tests within a product category are possible by displaying the product range on a virtual shelf, where a researcher can analyze the assortment, price differences and packaging options. Marketing research can also focus on how the virtual presence of other people, such as friends, salespeople, experts or influencers, might change consumer decisions. In contrast to physical spaces, virtual worlds also make it possible to modulate ambient conditions like lighting, time of day, townscapes or weather. To summarize, the ingredients for consumer neuroscience research on augmented reality and metaverse users are already available, and technological developments are fueling continuous improvements.
