There's Fair and there's Fair – Interaction Between Humans and Machines

Digital (voice) assistants like Cortana, Alexa, or Siri are becoming an integral part of our daily lives. Even in decision situations, e.g., consumption choices or investment decisions, artificial agents make suggestions and thus try to help humans make better decisions. In addition, developers are working to make these assistants more and more human-like, either by giving them names and voices or by letting them mimic human behavior such as using natural language or showing empathy.

In human interactions, e.g., negotiations or economic transactions, social norms and preferences play an important role. But is this still the case when humans interact with machines? As more and more economic interactions and transactions become anonymous and automated, this question is becoming increasingly relevant.

In lab experiments, we investigate how decisions change when humans interact with computer agents, such as intelligent decision support systems or conversational agents, instead of other humans, and what impact human characteristics of the system (e.g., a voice, a name, a human appearance, or even a personality) may have.

In a first project, we study the fundamental relationship between anthropomorphism and decision-making in social interactions with voice-based computer agents.

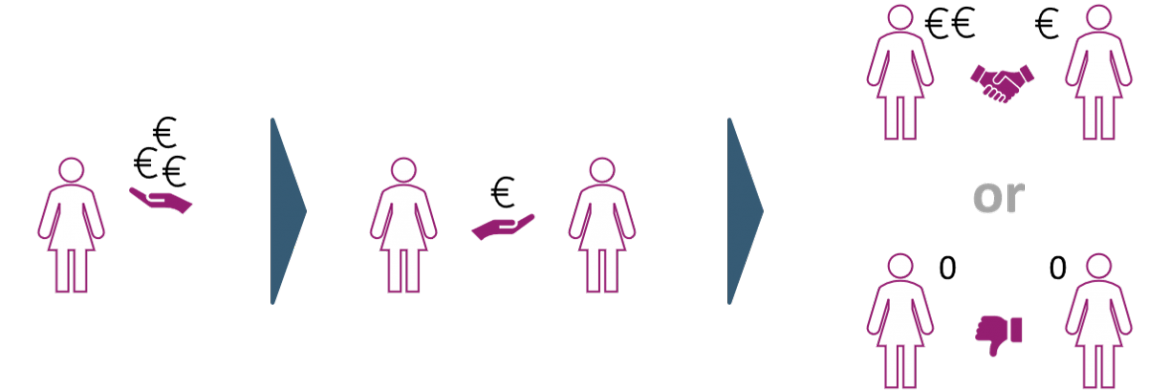

We conducted a so-called ultimatum game, in which one individual proposes how to split an amount of money between themselves and another person. If the other person accepts this allocation, both are paid accordingly; if the offer is rejected, both receive nothing. In a series of trials, we investigate whether the acceptance of unfair offers changes when the offer is made by a computer agent instead of another human, and when it is delivered via a voice message instead of the standard method, a text message.
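The payoff rule of a single ultimatum-game round can be stated compactly in code. The following is a minimal Python sketch under stated assumptions: the role names `proposer` and `responder`, the function name `ultimatum_payoffs`, and the amounts in the usage example are hypothetical illustrations, not taken from the study's materials, and the stakes actually used in the experiments are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Payoffs of one round (hypothetical role names)."""
    proposer: float   # the player who proposes the split
    responder: float  # the player who accepts or rejects it


def ultimatum_payoffs(pie: float, offer: float, accepted: bool) -> Outcome:
    """Hypothetical helper: payoffs of one ultimatum-game round.

    pie      -- total amount of money to be split (illustrative units)
    offer    -- share the proposer offers to the responder
    accepted -- the responder's decision
    """
    if not 0 <= offer <= pie:
        raise ValueError("offer must lie between 0 and the total amount")
    if accepted:
        # Accepted: both are paid according to the proposed allocation.
        return Outcome(proposer=pie - offer, responder=offer)
    # Rejected: both players receive nothing.
    return Outcome(proposer=0.0, responder=0.0)


if __name__ == "__main__":
    print(ultimatum_payoffs(10.0, 2.0, accepted=True))
    print(ultimatum_payoffs(10.0, 2.0, accepted=False))
```

In this illustrative example, a responder who rejects an offer of 2.0 out of 10.0 gives up 2.0 units in order to deny the proposer 8.0 units. Because rejection always leaves the responder with less money than acceptance of any positive offer, rejections of unfair offers are commonly interpreted as an expression of fairness preferences rather than pure payoff maximization.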

In a second project, we extend this setting by also giving the computer agent a human appearance in virtual reality.

With this research, we aim to gain a fundamental understanding of how humans make decisions in social interactions with human-like computer agents. The results have implications for economic decision situations in which consumers interact with AI-based smart machines, such as chatbots as the first touchpoint in a customer journey or AI-based voice agents in call centers, e.g., when complaining about products or services.

Key Results

- Humans are more likely to accept unfair offers from machines than from humans.

- However, giving machines human characteristics increases the tendency to reject unfair offers.

- This is particularly pronounced for human appearance (an avatar in VR).

- The effect of voice tends to be weak and requires further investigation.

Project team

- Dr. Matthias Unfried, Head of Behavioral Science, NIM, matthias.unfried@nim.org

- Dr. Michael K. Zürn, Senior Researcher, NIM, michael.zuern@nim.org

Cooperation partner

- Prof. Alessandro Innocenti, University of Siena

Contact