Research

Transparency Without Trust

Generative AI offers unprecedented efficiency in marketing content creation. However, the EU’s new AI-labeling rule presents a challenge: Research shows that consumers become more skeptical and less engaged when they know content is AI-generated. How can marketers build trust and unlock AI’s potential?

Marketers are rapidly adopting generative AI tools to produce ads, social media posts, and other content at scale. In 2024, a study by the Nuremberg Institute for Market Decisions (NIM) found that 100% of 600 surveyed marketing professionals were using AI in their activities, attracted by the technology’s promise of efficiency and the high quality of the output (Buder et al., 2024).

However, while AI technology has advanced rapidly, regulation has struggled to keep up. A significant development in this area is the EU’s upcoming requirement to label AI-generated content (Generaldirektion Kommunikation, 2024). This regulation aims to increase transparency and consumer trust, but it also introduces new challenges for businesses, as early signals from the market point to consumer skepticism toward AI-generated content. For example, Coca-Cola’s 2023 attempt to remake its iconic holiday advertisement with generative AI was met with public backlash. Viewers derided the AI-crafted spot as “soulless” despite the company’s praise for the technology’s efficiency.

Such reactions underscore a key managerial challenge: How does labeling content as “AI-generated” influence consumer perception? Could labeling actually reduce consumer engagement rather than enhance trust? Understanding these effects is crucial for companies that rely on AI for advertising, branding, and customer engagement.

Consumer Trust in AI Is Low

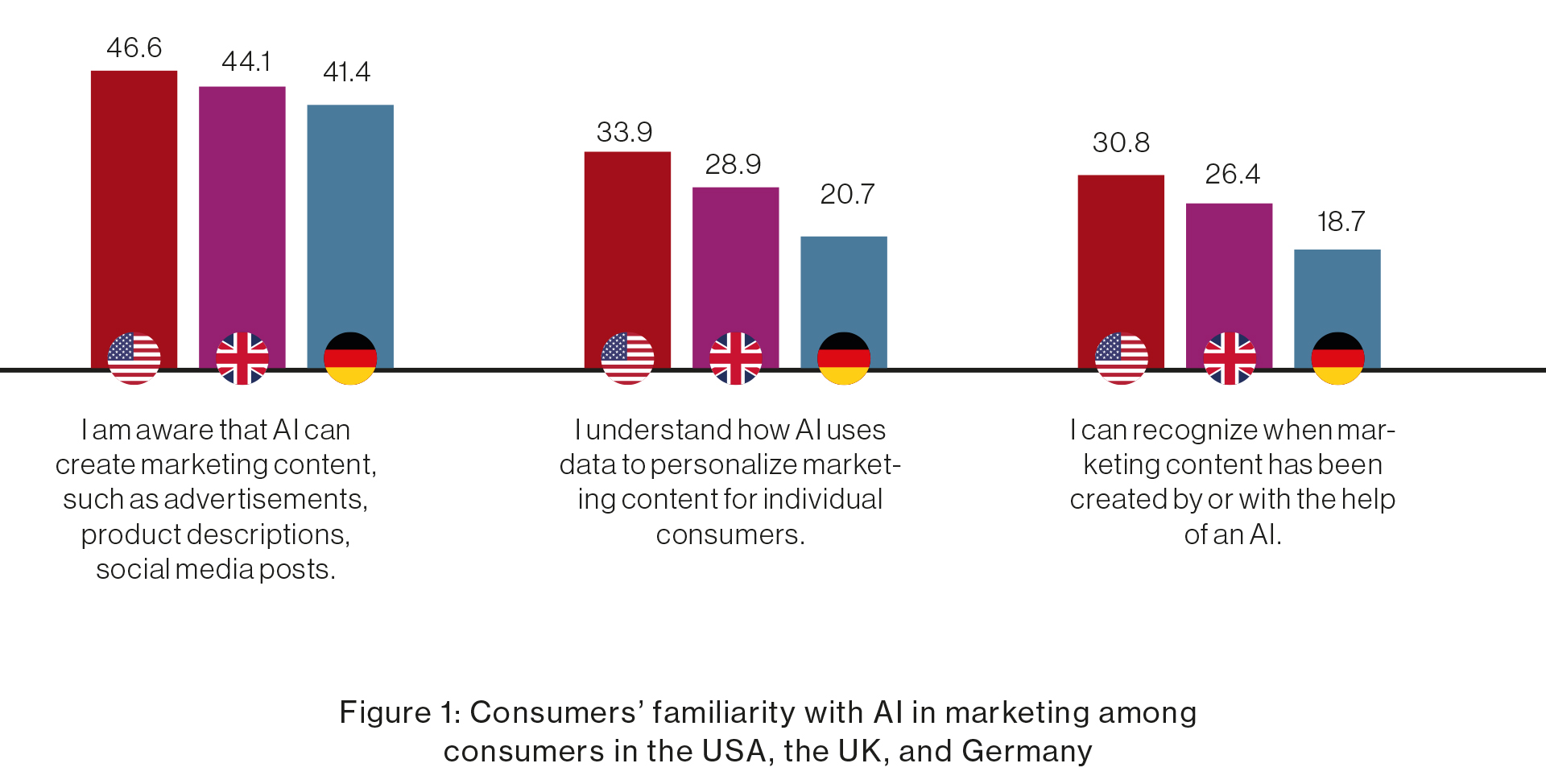

To explore these effects, a team of NIM researchers conducted a series of controlled online experiments. In a representative study, the team surveyed 1,000 respondents each in the USA, the UK, and Germany to explore what people know about AI in marketing and how they feel about AI in general and about its use in marketing. The data shows that, on average, 44% of the participants are aware that AI can create marketing content like ads or social media posts. However, only about 28% of the participants understand how AI uses personal data to personalize marketing content, and only 25% think that they can recognize AI-generated content.

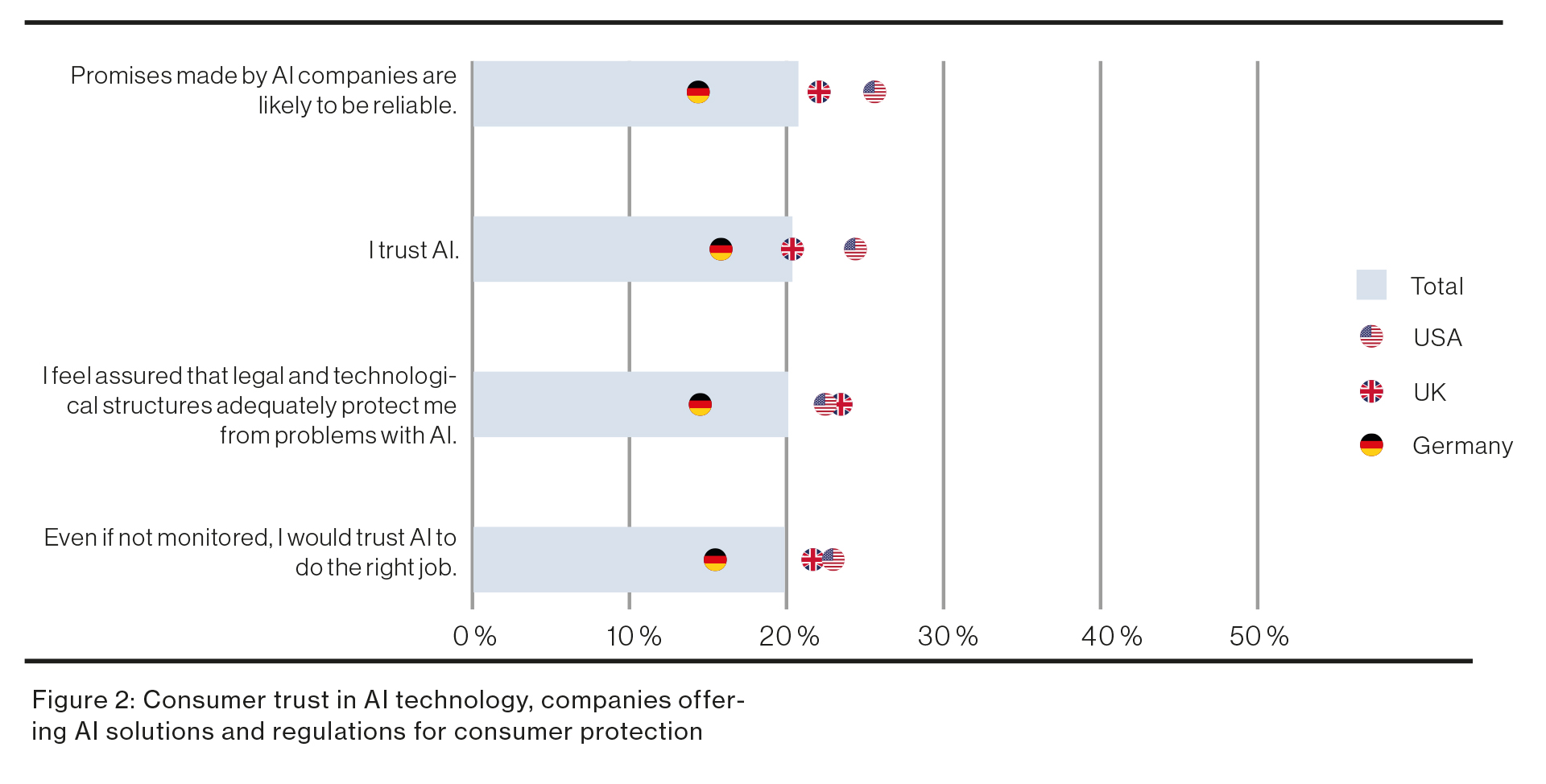

Regarding trust in AI and companies using AI, the study showed that 21% of the respondents trust AI companies and their promises, and only 20% trust AI itself. These findings reveal a notable gap between general awareness of AI in marketing and a deeper understanding of, or trust in, its application. To better understand how such perceptions influence actual consumer responses, we conducted two experiments investigating reactions to AI-generated marketing content and the factors that may shape these responses.

Experiment I

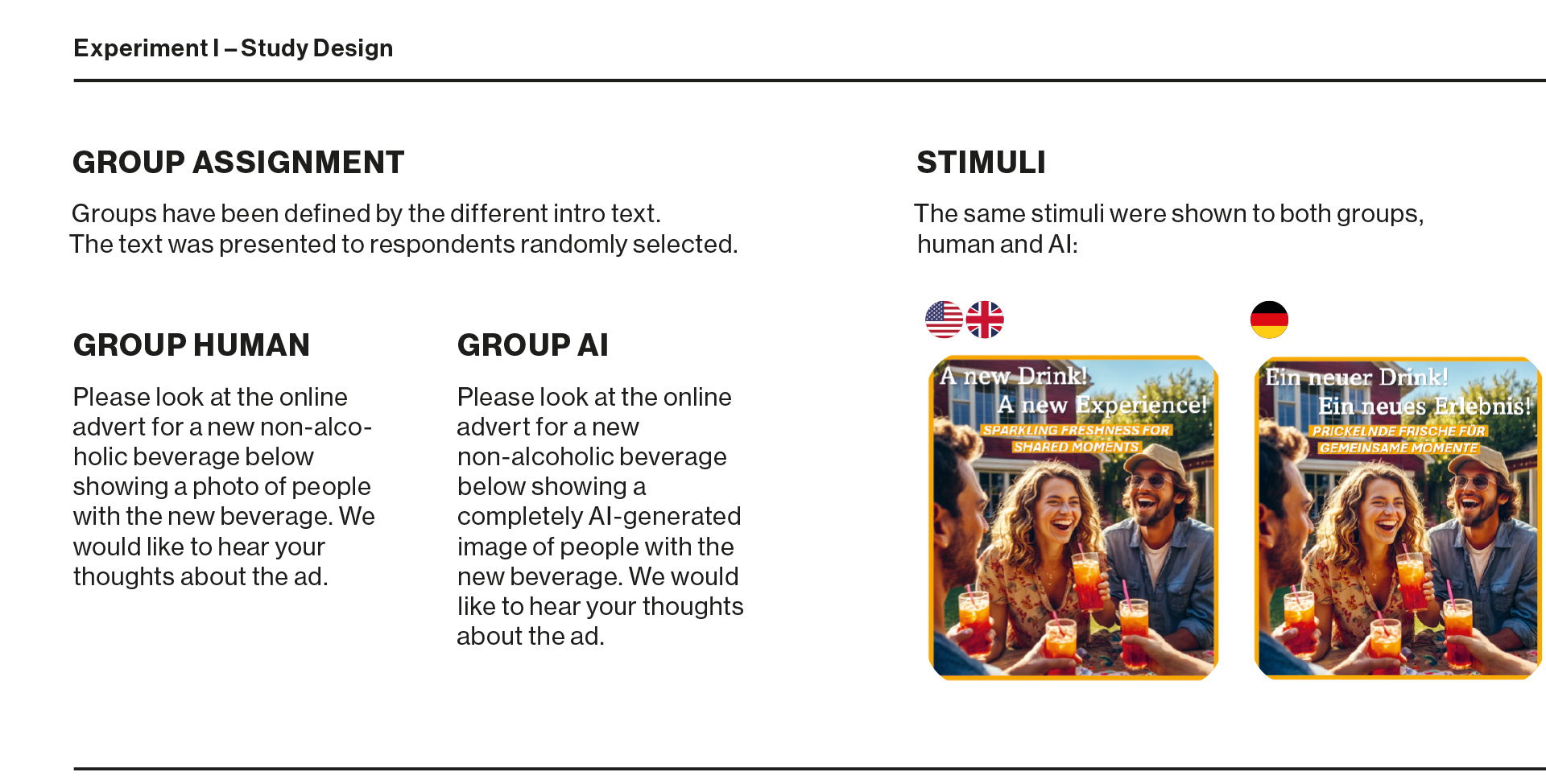

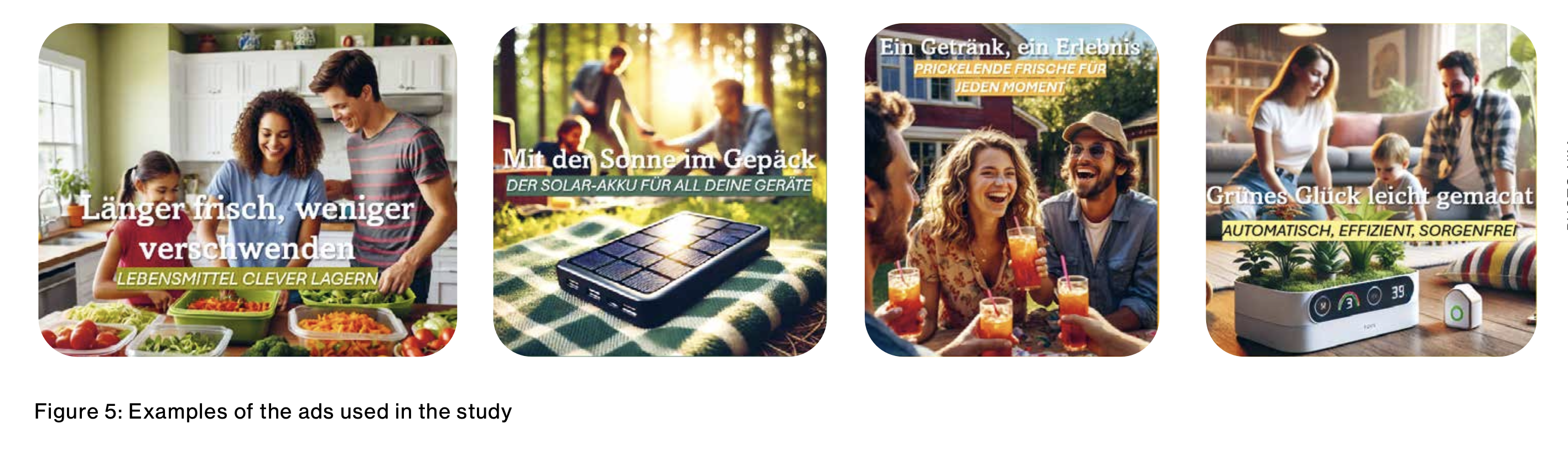

As part of the representative survey, we included a short experiment on AI disclosure in advertising. We showed the participants identical marketing content (a product ad), telling half of them that it was a photo and the other half that it was an AI-generated image. After viewing the ad, participants rated, among other things, its appeal, credibility, emotional impact, and memorability, and indicated their willingness to click to learn more about the product.

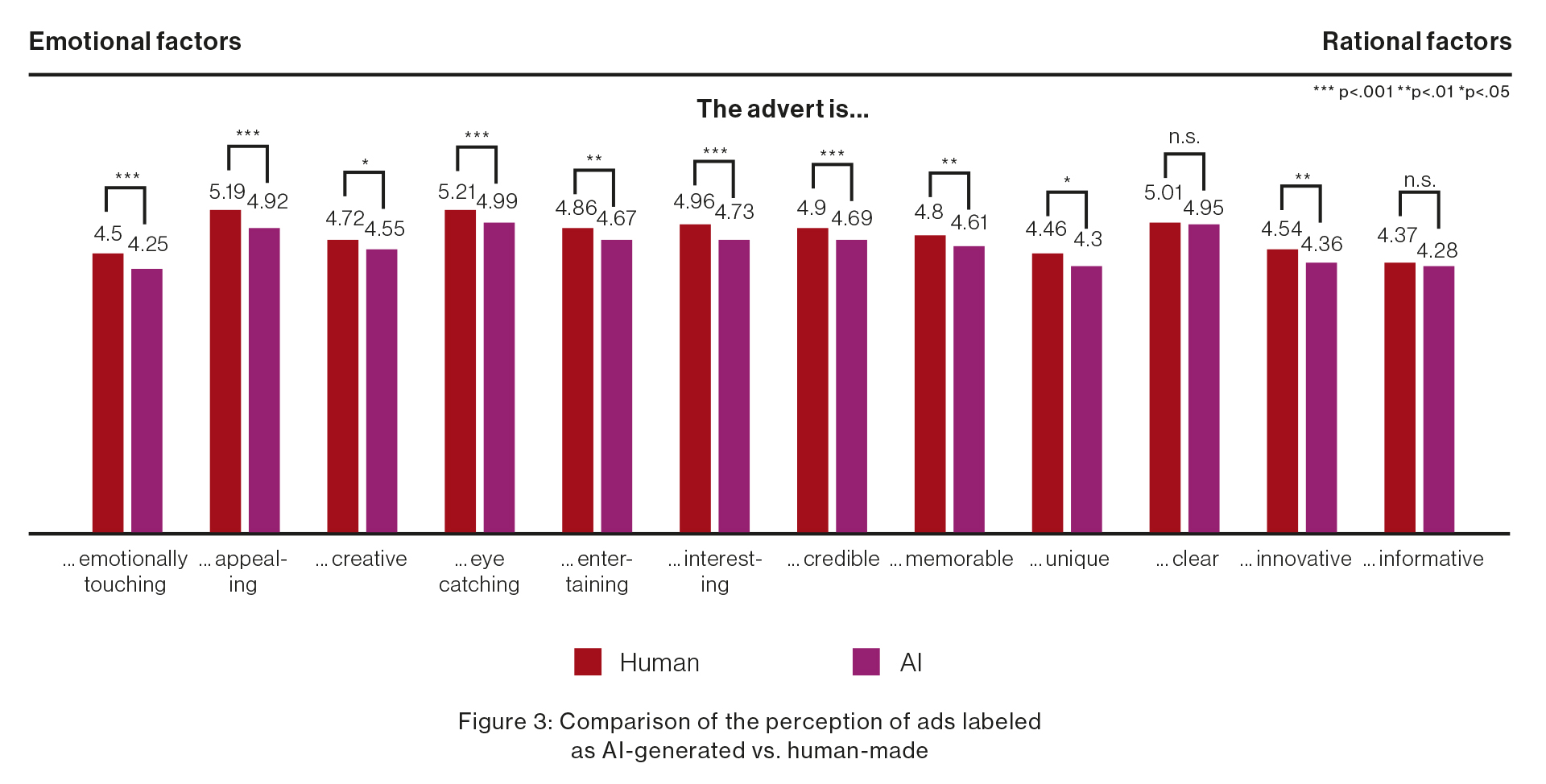

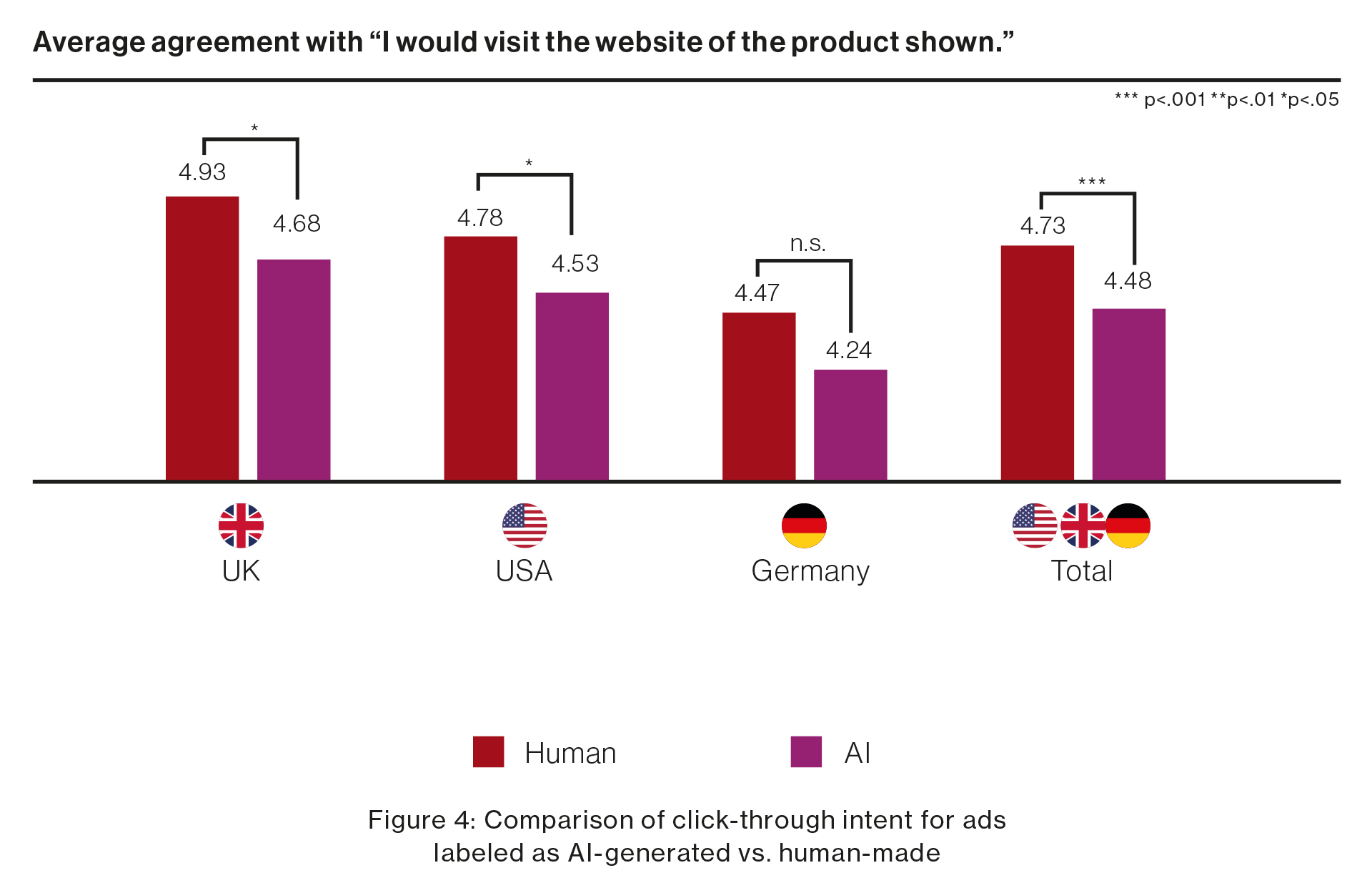

The ad described as AI-made was perceived more negatively than the identical ad presented as human-made, especially regarding emotional aspects. In addition, participants were less inclined to click on or further engage with products featured in AI-generated ads, indicating a drop in engagement and potential conversion. The impact is small but meaningful: While the effect size is not drastic, it signals a consistent preference for human-created content.
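To make the idea of a small but consistent effect concrete, the comparison between the two label conditions can be expressed as a standardized mean difference. The following Python sketch is illustrative only: The function names and the rating values are hypothetical and are not the study's data or its analysis code. It computes Cohen's d and a Welch t statistic for two independent groups.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference (a minus b) using the pooled variance."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def welch_t(group_a, group_b):
    """Welch t statistic for two independent groups with unequal variances."""
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Hypothetical appeal ratings on a 1-7 scale, made up for illustration.
# They are NOT taken from the NIM study.
rated_as_photo = [5, 4, 6, 5, 4, 5, 6, 3]
rated_as_ai = [4, 4, 5, 5, 3, 5, 5, 3]

print("Cohen's d:", round(cohens_d(rated_as_photo, rated_as_ai), 2))
print("Welch t:", round(welch_t(rated_as_photo, rated_as_ai), 2))
```

By a common rule of thumb, an absolute d of about 0.2 is considered small and about 0.5 medium. A small d that recurs across measures would be consistent with the pattern described above.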

Experiment II – AI-labeled advertisements

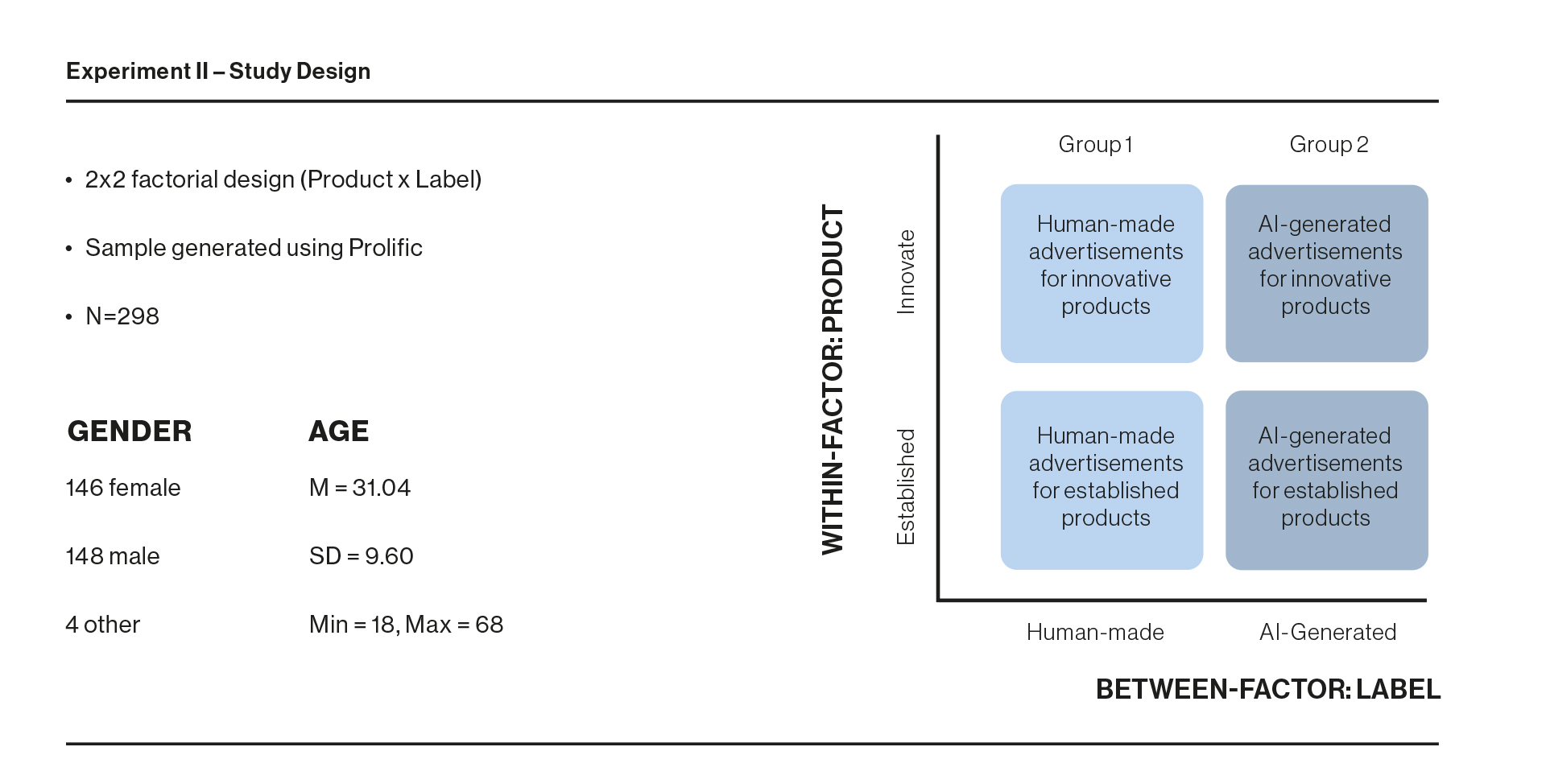

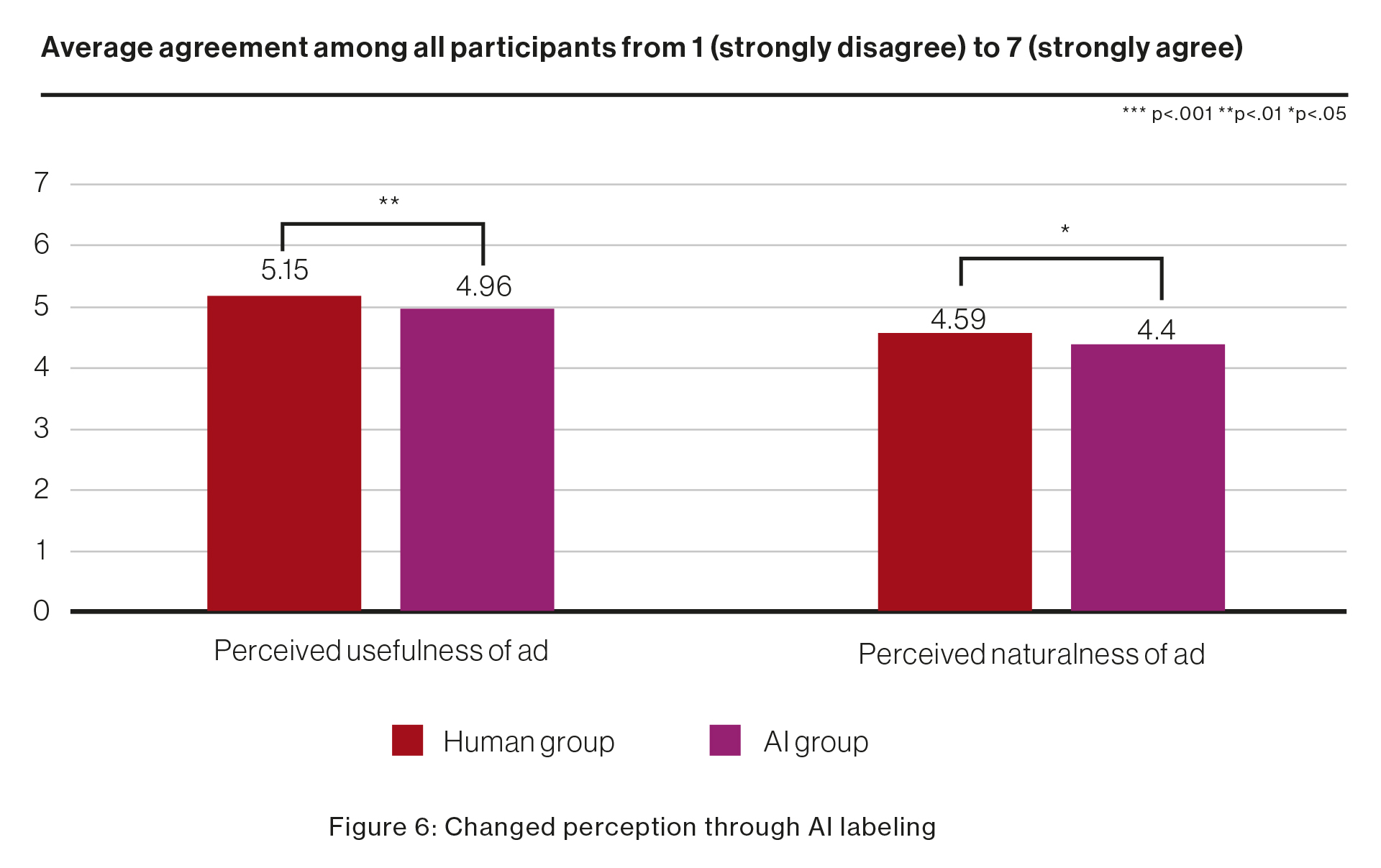

To explore these effects in more detail, the NIM project team conducted a second experiment controlling for product-specific effects. In this study, which involved German participants only, the participants were divided into two groups and shown the same six advertisements, covering both well-established and innovative products. One group, however, saw the ads labeled as “AI-generated,” while the other group saw them labeled as “human-generated.”

Participants rated the ads based on their perceived usefulness, clarity, and overall appeal, with the goal being to assess whether the label alone influenced how people perceived the ads. Additionally, the study examined factors that might influence these perceptions, such as general trust in AI and personal beliefs about creativity, specifically the attribution of creativity to humans alone.

The study revealed a clear pattern: Labeling an ad as AI-generated led to a more critical evaluation. Consumers tended to see these ads as less natural and less useful, even though the content was identical to the ads labeled as human-made. This shift in perception had direct consequences for engagement: Attitudes toward the ad became more negative, and participants’ willingness to research or purchase the product decreased.

Of note, trust in AI played a significant role in shaping responses. Participants who already had a positive attitude toward AI technology were more open to AI-generated ads and evaluated them more favorably. In contrast, those who strongly believed that creativity is an exclusively human trait showed a stronger negative bias toward AI-generated content.

Interestingly, the impact of an AI label also varied depending on the type of product being advertised. AI-generated ads were more accepted when promoting innovative, high-tech products. In these cases, consumers seemed to perceive AI as a natural fit for futuristic offerings. Conversely, when AI was used in ads for more traditional products, the negative bias was stronger. This suggests that while AI can be effective in marketing, its impact depends on the context in which it is applied.
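One way to express this kind of moderation is as a difference between label effects: the rating gap between AI-labeled and human-labeled ads is computed separately for each product type, and the two gaps are then compared. The sketch below is a minimal illustration under stated assumptions. The record layout, the field names (`product_type`, `label`, `rating`), and all values are hypothetical and do not come from the study.

```python
from statistics import mean


def label_effect(records, product_type):
    """Mean rating under the AI label minus mean rating under the human label."""
    ai = [r["rating"] for r in records
          if r["product_type"] == product_type and r["label"] == "ai"]
    human = [r["rating"] for r in records
             if r["product_type"] == product_type and r["label"] == "human"]
    return mean(ai) - mean(human)


def moderation_contrast(records):
    """Label effect for innovative products minus that for traditional ones.

    A positive value means the AI label hurts innovative products less.
    """
    return label_effect(records, "innovative") - label_effect(records, "traditional")


# Hypothetical ratings, made up for illustration (NOT study data).
example = [
    {"product_type": "innovative", "label": "ai", "rating": 5},
    {"product_type": "innovative", "label": "ai", "rating": 5},
    {"product_type": "innovative", "label": "human", "rating": 5},
    {"product_type": "innovative", "label": "human", "rating": 6},
    {"product_type": "traditional", "label": "ai", "rating": 3},
    {"product_type": "traditional", "label": "ai", "rating": 4},
    {"product_type": "traditional", "label": "human", "rating": 5},
    {"product_type": "traditional", "label": "human", "rating": 5},
]

print("Label effect, innovative:", label_effect(example, "innovative"))
print("Label effect, traditional:", label_effect(example, "traditional"))
print("Moderation contrast:", moderation_contrast(example))
```

In a real analysis, this contrast would typically be estimated as an interaction term in a regression model, which also yields a measure of uncertainty.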

Notably, the skepticism was lower among participants who reported a higher general trust in AI and technology, indicating that trust in the technology is a key moderating factor in how consumers respond. However, for the average audience, transparency alone (i.e., labeling content as AI-generated) “reveals a fundamental problem but doesn’t solve it.” In essence, making audiences aware that an ad is AI-made can alert them to authenticity issues without addressing the root trust deficit. Even the most polished AI content will fall short if the audience does not instinctively trust it.

The Transparency Dilemma: Labeling Content as AI-Generated Leads to Lower Content Ratings and Engagement

In short, simply knowing that a piece of content was crafted by an algorithm—as opposed to by a human creative—made people trust it less and engage with it less enthusiastically. These results suggest that even technically polished AI content faces a “trust penalty”—a bias where consumers react warily when they sense a message was created by a machine. This finding confirms marketers’ intuition that something can feel off or impersonal about machine-made messages, even when content quality is high.

These findings have significant implications for companies using AI-generated content in their marketing strategies. The fact that simply labeling content as AI-generated can lead to a more skeptical response highlights the need for businesses to actively manage consumer perceptions.

One key takeaway is the importance of building trust in AI. The study shows that individuals with higher trust in AI rated AI-generated ads more positively. Companies should therefore focus on increasing familiarity with AI technologies and their benefits to counteract initial skepticism. Educating consumers about AI’s role in enhancing creativity rather than replacing it may also help reduce the bias against AI-generated content.

Another crucial insight is that AI-generated content is more effective when used strategically. Since consumers respond more positively to AI-generated ads for innovative products, businesses should consider aligning their AI-driven marketing efforts with products that already carry a technological or futuristic appeal. For traditional products, alternative approaches—such as blending AI and human creativity—might be more effective.

Conclusion: Transparency vs. Perception

The EU’s AI-labeling requirement presents a challenge for marketers: While transparency is meant to build trust, the reality is more complex. Labeling AI-generated ads can create unintended skepticism, leading consumers to view these ads more critically than necessary.

To navigate this challenge, businesses must go beyond compliance and proactively shape consumer attitudes toward AI. Building trust, addressing biases, and strategically positioning AI-generated content—especially for innovative products—will be key to leveraging AI’s full potential without alienating consumers.

By understanding and addressing these dynamics, companies can ensure that AI remains a powerful asset in marketing rather than a barrier to consumer engagement.

Project team

- Dr. Fabian Buder, Head of Future & Trends Research, NIM, fabian.buder@nim.org

- Dr. Matthias Unfried, Head of Behavioral Science, NIM, matthias.unfried@nim.org

Contact