The Illusion of Free Choice in the Age of Augmented Decisions

Fabian Buder, Koen Pauwels and Kairun Daikoku

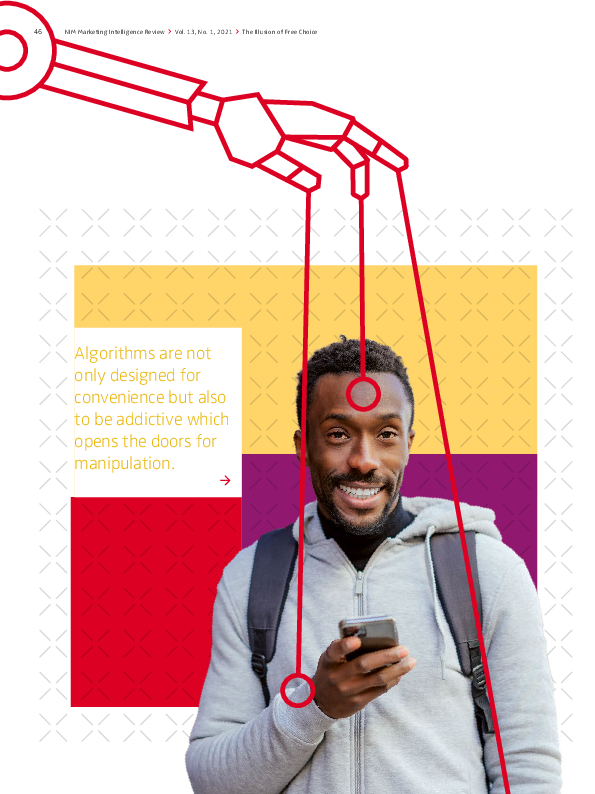

In our augmented world, many decision situations are designed by smart technologies. Artificial intelligence helps reduce information overload, filter relevant information and limit an otherwise overwhelming abundance of choices. While such algorithms make our lives more convenient, they also serve various organizational objectives that users may not be aware of and that may not be in their best interest. We do not know whether algorithms truly optimize for their users' benefit or for a company's return on investment. They are designed not only for convenience but also to be addictive, and this opens the door to manipulation. Augmented decision making therefore undermines freedom of choice. To limit the threats of augmented decisions and enable people to be critical of the outcomes of artificial intelligence–driven recommendations, everybody should develop "algorithmic literacy." This involves a basic understanding of artificial intelligence and of how algorithms work in the background. Algorithmic literacy also requires that users understand the role and value of the personal data they give up in exchange for decision augmentation.
